Trustworthy Code Generation

Walk the Talk + Midjourney

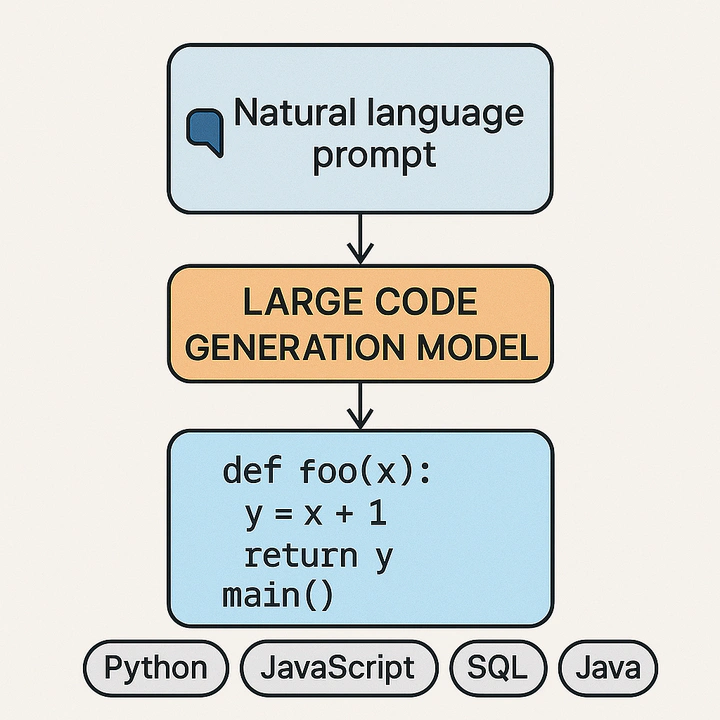

Large code models (LCMs) have become popular as large language models (LLMs) have grown more capable. LCMs may be treated the same way as LLMs, but we claim that LCMs have a unique property: their output is executable! Could this property help us build LCMs that are more functional and vulnerability-free? More precisely,

How can we learn large code models (LCMs) that are functional (i.e., satisfy the user's specification) and free of vulnerabilities by leveraging the executable property of code?

We leverage traditional static and dynamic program analysis methods, including fuzzing, to define functional specifications (e.g., arXiv'25). Could we make this better?
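As a rough illustration of how execution can stand in for a functional specification, the sketch below compares a generated function against a trusted reference on randomly generated inputs, in the spirit of differential fuzzing. It is not the method from the cited work; `fuzz_agree`, `gen_list`, and both example functions are hypothetical names introduced only for this example.

```python
import copy
import random


def fuzz_agree(candidate, reference, gen_input, trials=200, seed=0):
    """Compare candidate and reference on random inputs.

    Returns (True, None) if they agree on every trial, otherwise
    (False, counterexample) for the first disagreeing input.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x = gen_input(rng)
        expected = reference(copy.deepcopy(x))
        try:
            got = candidate(copy.deepcopy(x))
        except Exception:
            # A crash counts as a functional failure.
            return False, x
        if got != expected:
            return False, x
    return True, None


def gen_list(rng):
    # Hypothetical input generator: short lists over a small value range,
    # so duplicates appear often.
    return [rng.randint(0, 3) for _ in range(rng.randint(0, 8))]


def reference_sort(xs):
    return sorted(xs)


def buggy_sort(xs):
    # Stand-in for a flawed generated function: silently drops duplicates.
    return sorted(set(xs))


ok, counterexample = fuzz_agree(buggy_sort, reference_sort, gen_list)
print(ok, counterexample)
```

Agreement on random inputs is only evidence, not proof, of functional correctness; a real fuzzer would also use coverage feedback to pick inputs rather than sampling blindly.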

Ongoing/Potential Projects

- Code Generator Learning: Align LCMs via RL with unit tests.

- Patch Generation: Train AI agents with tool calling to fix GitHub issues.

- Security Patch Generation: Train AI agents with tool calling to fix security vulnerabilities.
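To make the first project idea concrete, here is a minimal sketch of a unit-test-based reward that an RL loop could use to score generated code. It assumes each test is a standalone assertion string and that the reward is the fraction of tests that pass; `unit_test_reward` is a hypothetical helper, not necessarily the setup used in these projects.

```python
import subprocess
import sys


def unit_test_reward(code: str, tests: list[str], timeout: float = 5.0) -> float:
    """Return the fraction of test snippets that pass for the generated code.

    Each test runs in a fresh Python subprocess. This isolates crashes and
    hangs, but it is NOT a security sandbox for untrusted code.
    """
    if not tests:
        return 0.0
    passed = 0
    for test in tests:
        program = code + "\n" + test + "\n"
        try:
            result = subprocess.run(
                [sys.executable, "-c", program],
                capture_output=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            continue  # A hang counts as a failed test.
        if result.returncode == 0:
            passed += 1
    return passed / len(tests)


# Hypothetical generated samples and tests.
good = "def add(a, b):\n    return a + b"
bad = "def add(a, b):\n    return a - b"
tests = ["assert add(1, 2) == 3", "assert add(0, 0) == 0"]

print(unit_test_reward(good, tests))
print(unit_test_reward(bad, tests))
```

A graded reward like this gives partial credit for partially correct code; a binary all-tests-pass reward is the stricter alternative.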

Do you have creative ideas for making LCMs better or for using LCMs to solve other tasks?

Keywords

- Code Generator

- Selective Prediction

- Conformal Prediction